ryzen 5 3600 rx 580 fortnite


As promised in our day-one Radeon RX 5500 XT review, today we're taking a look at the 4GB version to see how it performs and more importantly how it compares to not only 8GB models, but also other graphics cards that compete in the same price range like the RX 580. On hand for testing we have the Sapphire Pulse RX 5500 XT 4G and Pulse RX 5500 XT 8G, both of which come in cute little boxes.

What's great about using these cards for a 4GB vs. 8GB test, is that with the exception of memory capacity, they're identical, so there are no other factors to account for such as cooler quality or operating clock speeds that can influence the results.

The Sapphire Pulse 5500 XTs are also top notch cards that retail for about $10 over the MSRP, a reasonable markup given they feature a factory overclock, a well designed cooler with an excellent aluminium backplate, and they even come with a dual BIOS. They're also relatively compact dual-slot cards that measure just 233mm long.

The test system used includes a Core i9-9900K clocked at 5 GHz with 16GB of DDR4-3400 memory. Same as our previous review, we have 12 games to look at, all tested at 1080p and 1440p resolutions using medium to high quality presets or settings, so let's get into the results.

Benchmarks

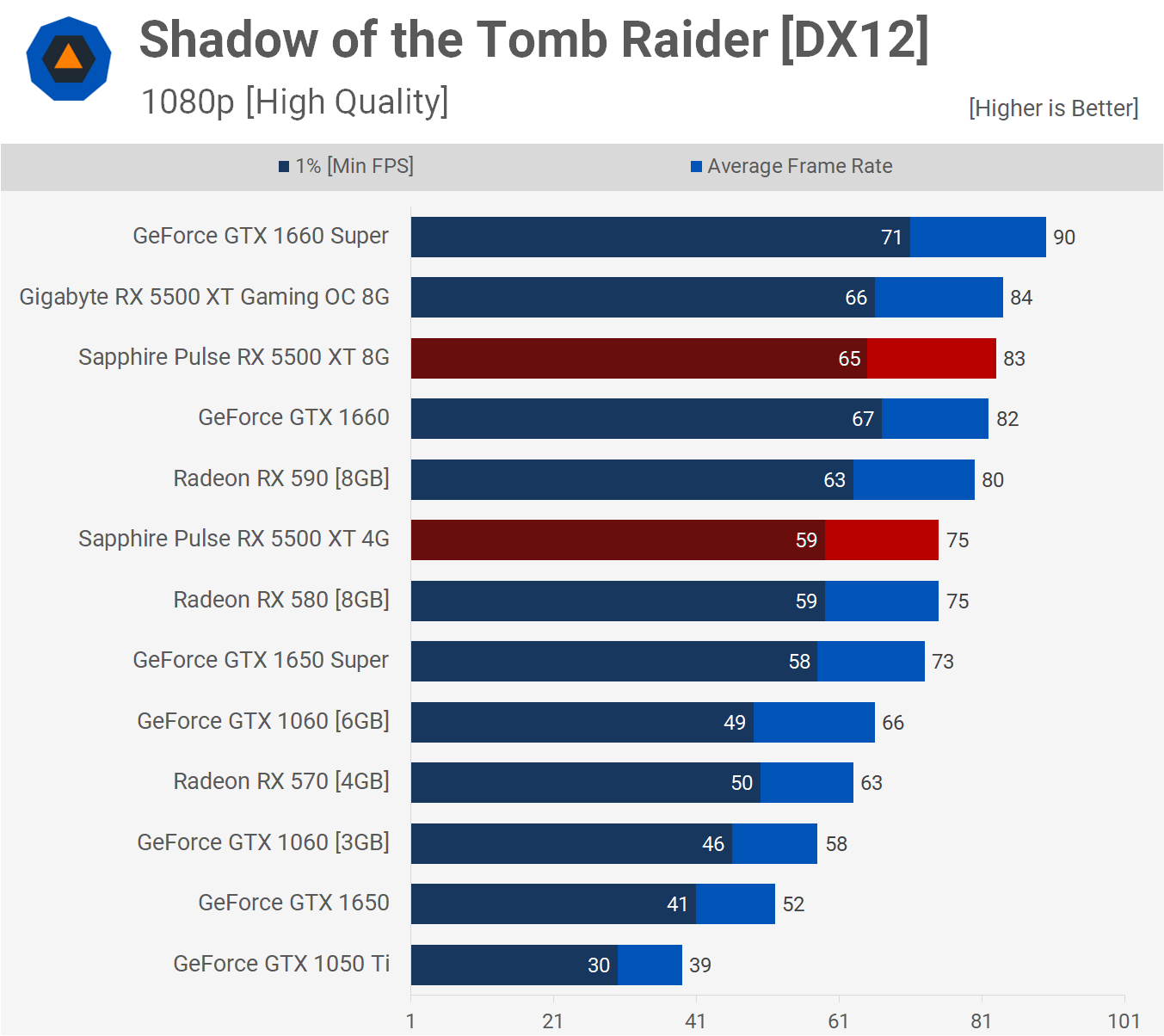

First up we have Shadow of the Tomb Raider and here the 4GB 5500 XT was 10% slower than the 8GB version when looking at the average frame rate and that meant it was no faster than the RX 580 and 6% slower than the RX 590. It was also on par with the GTX 1650 Super, averaging just 2 fps more for a mere 3% performance increase.

Increasing the resolution to 1440p doesn't change much. The 4GB version was 11% slower this time and again that placed it on par with the RX 580 and slightly ahead of the GTX 1650 Super.

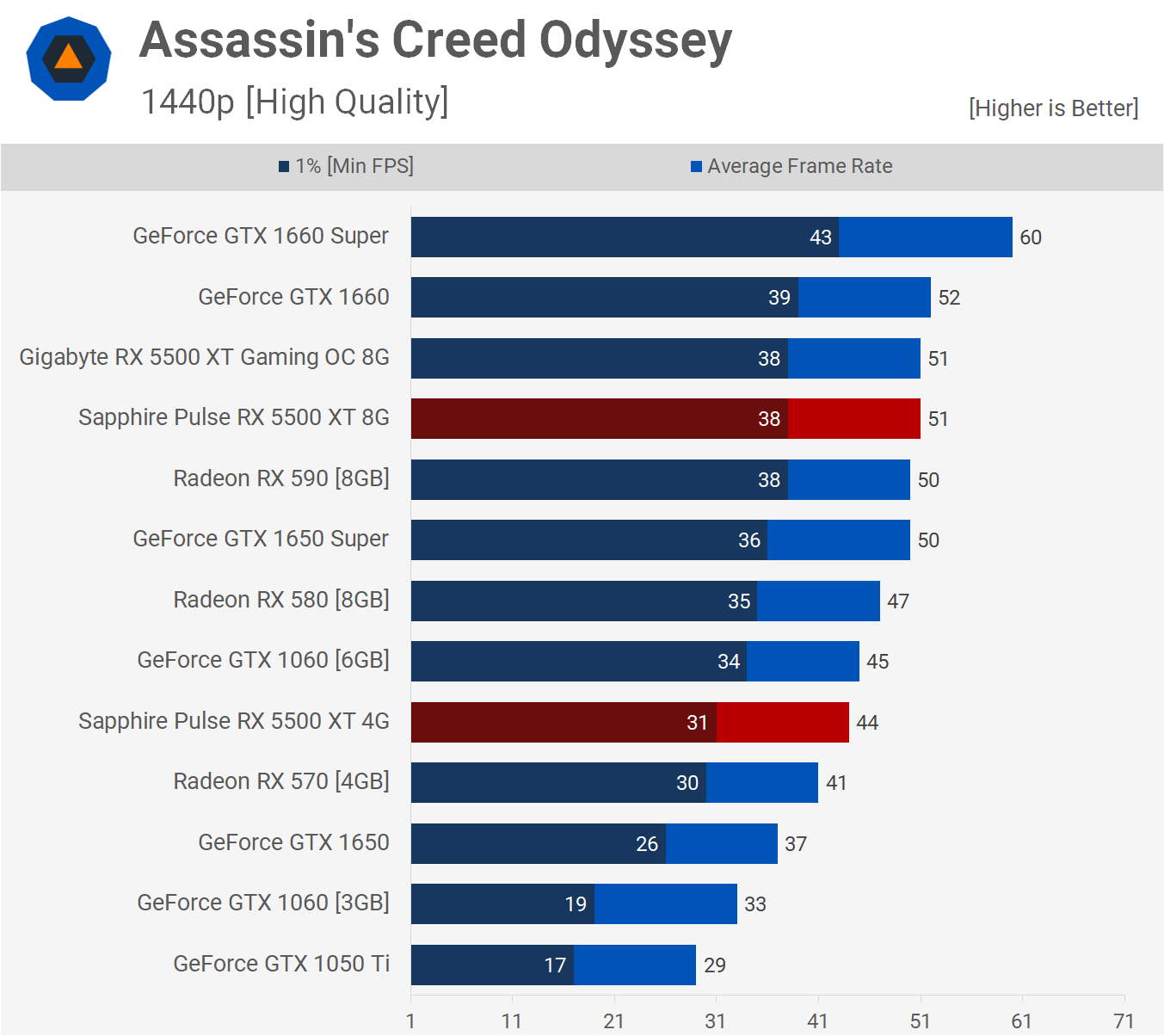

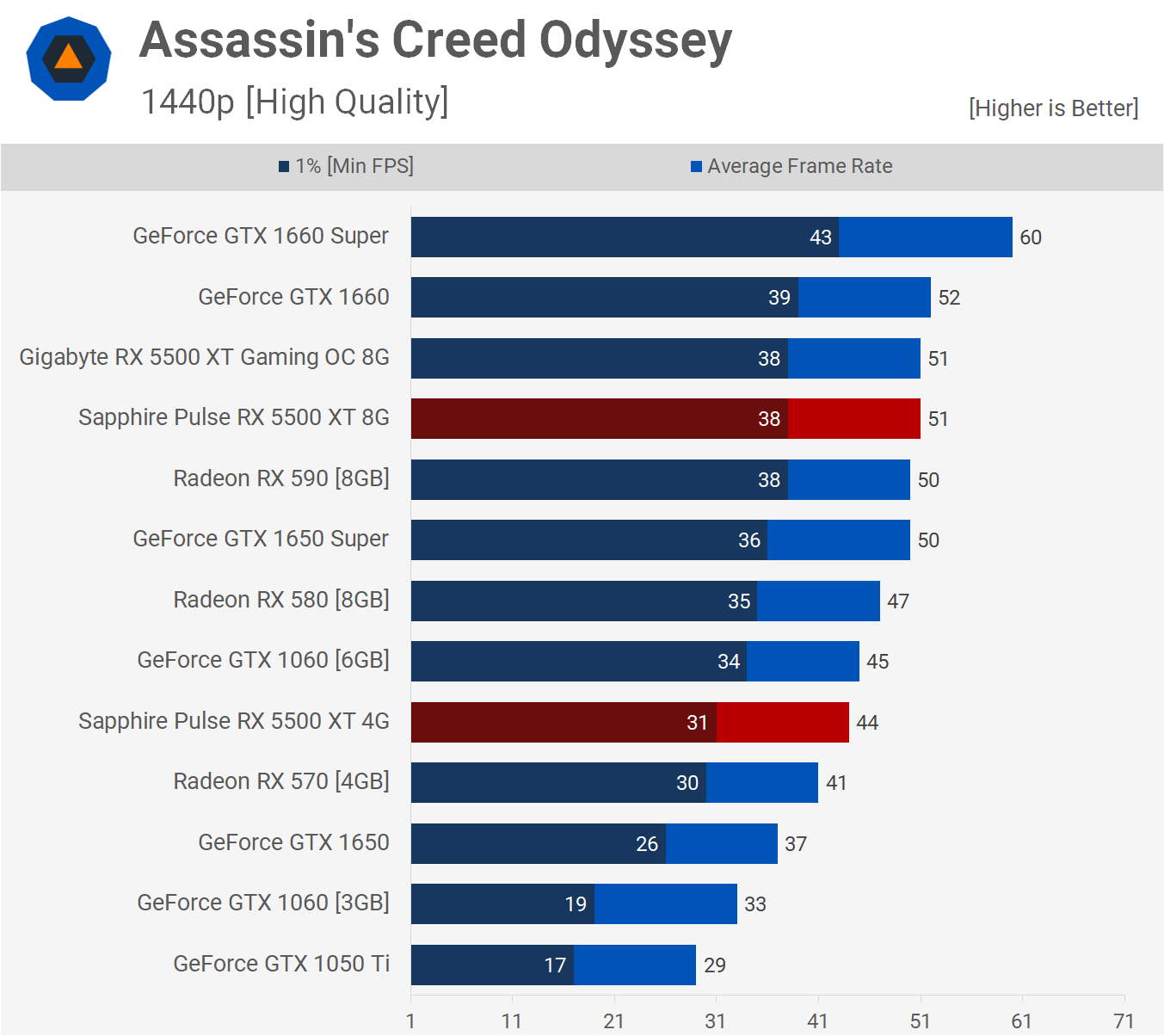

The performance drop off for the 4GB version in Assassin's Creed Odyssey at 1080p is pretty minimal. That said, performance-wise it's also comparable to the GTX 1650 Super.

The move to 1440p does see a significant reduction in performance for the 4GB 5500 XT, which is interesting because the 1650 Super doesn't appear to suffer nearly as much. As a result, the 4GB 5500 XT was 12% slower than the 1650 Super. Basically, under these conditions you're looking at RX 570 levels of performance.

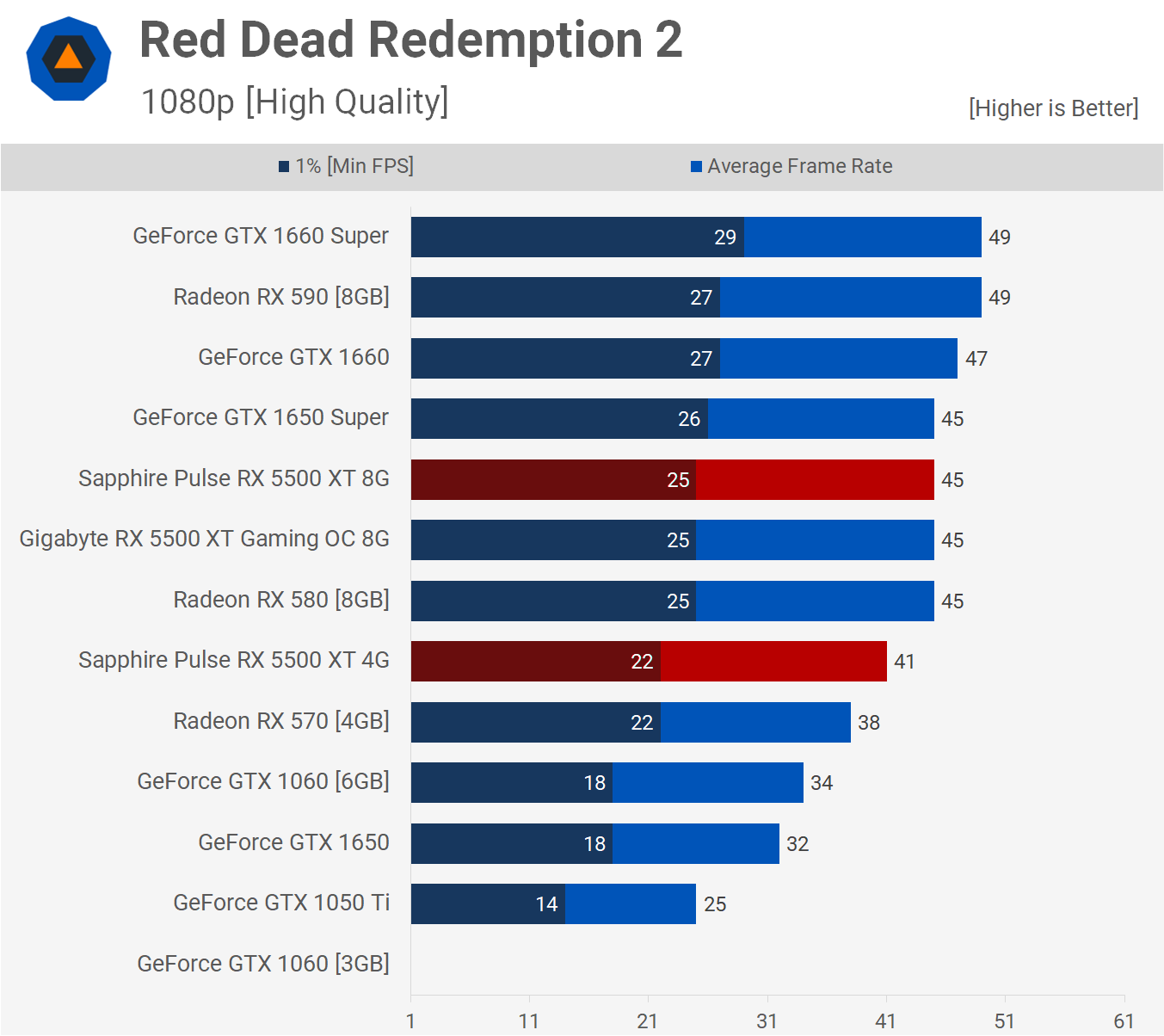

We'll have to update the Red Dead Redemption 2 test for these lower-end GPUs with medium to low quality settings in the near future, as high is just too much for this class of GPU. At 1080p the 4GB 5500 XT sees a 9% performance hit, which isn't great and that also makes it 9% slower than the 1650 Super.

Although the 4GB 5500 XT also takes a hit at 1440p, we think the biggest performance bottleneck here is the GPUs themselves. In other words, the GPUs are choking before VRAM capacity becomes a serious issue.

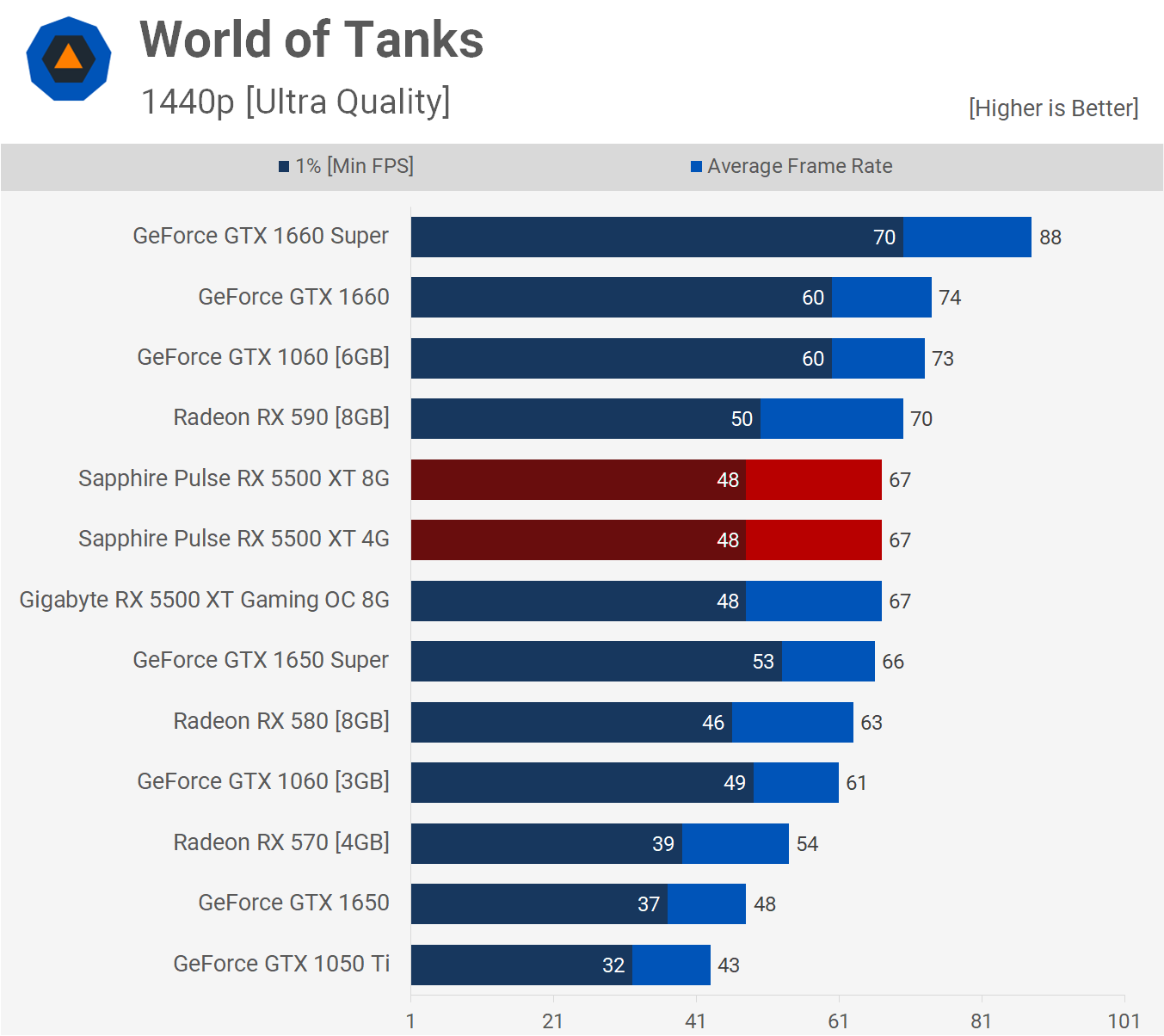

Moving on to World of Tanks, this game only allocates around 3GB of VRAM at 1440p using the maximum in-game quality settings with the HD client version. Thus at 1080p we see no difference between the 4GB and 8GB versions of the 5500 XT.

It's the same story at 1440p, with up to just 3GB of memory allocated, both the 4GB and 8GB versions deliver the same level of performance.

The 4GB 5500 XT drops a few frames when compared to the 8GB model in Far Cry New Dawn, but overall performance at 1080p using the second highest quality preset is still very good.

Much the same is seen at 1440p. We're using the second highest quality preset and despite that the 4GB 5500 XT is still able to average just over 60fps.

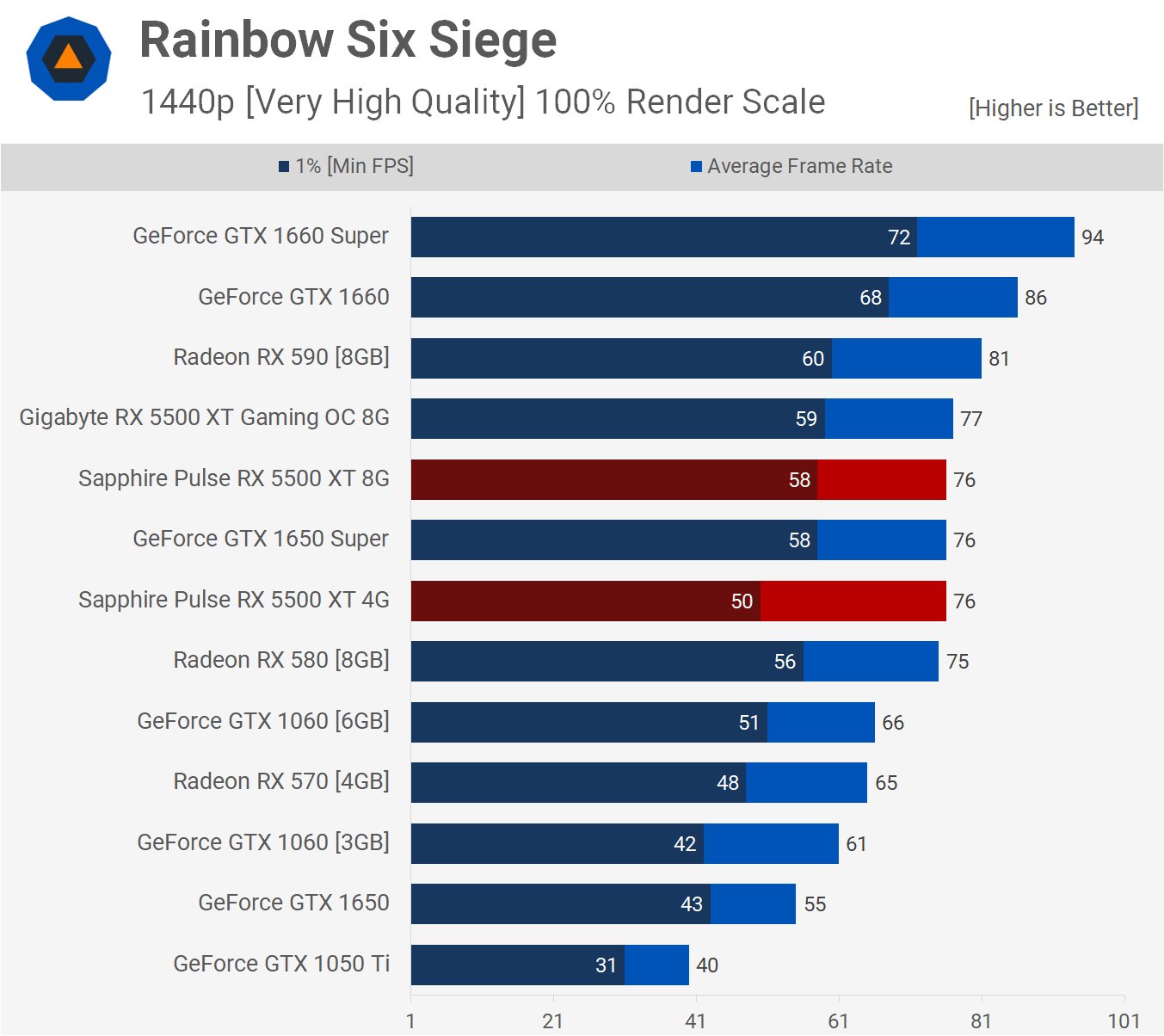

We're not playing Rainbow Six Siege with the highest visual quality settings, rather the second quality preset with a manual render scale of 100% set. This saw the 4 and 8 GB 5500 XT models deliver the same 128 fps on average at 1080p.

Even at 1440p we see the same average frame rate. However, it's the 1% low performance that falls away with the 4GB model and while it's still very playable, this cut down version wasn't quite as smooth overall.
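The review does not state how its 1% low figures are derived, and tools differ on the exact definition. As a rough sketch of one common approach (averaging the slowest 1% of frames and converting that to fps), the following shows why two cards can share an average frame rate while one feels less smooth. The function names and the frame-time sample are hypothetical and purely illustrative, not measurements from this test.

```python
def average_fps(frame_times_ms):
    """Average frame rate over a capture of per-frame render times (ms)."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low(frame_times_ms):
    """Frame rate implied by the mean of the slowest 1% of frames.

    Assumption: this is one common definition; other tools report the
    99th-percentile frame time instead.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # at least one frame
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / len(worst))


# Synthetic capture: 198 smooth frames at 8 ms plus 2 stutters at 25 ms.
sample = [8.0] * 198 + [25.0] * 2

print(round(average_fps(sample), 1))      # average barely notices the stutter
print(round(one_percent_low(sample), 1))  # 1% low exposes it
```

In this made-up capture the two stutter frames hardly move the average, yet they set the 1% low entirely, which is the pattern described above for the 4GB model.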

Next up we have Star Wars Jedi: Fallen Order and at 1080p using the high quality preset which is one step below the max 'Ultra' setting, we see no difference in performance between the 4GB and 8GB versions of the 5500 XT.

At 1440p we observe the same result. In this game the Radeon 5500 XT delivered basically the same level of performance as the 1650 Super and even the 3GB GTX 1060.

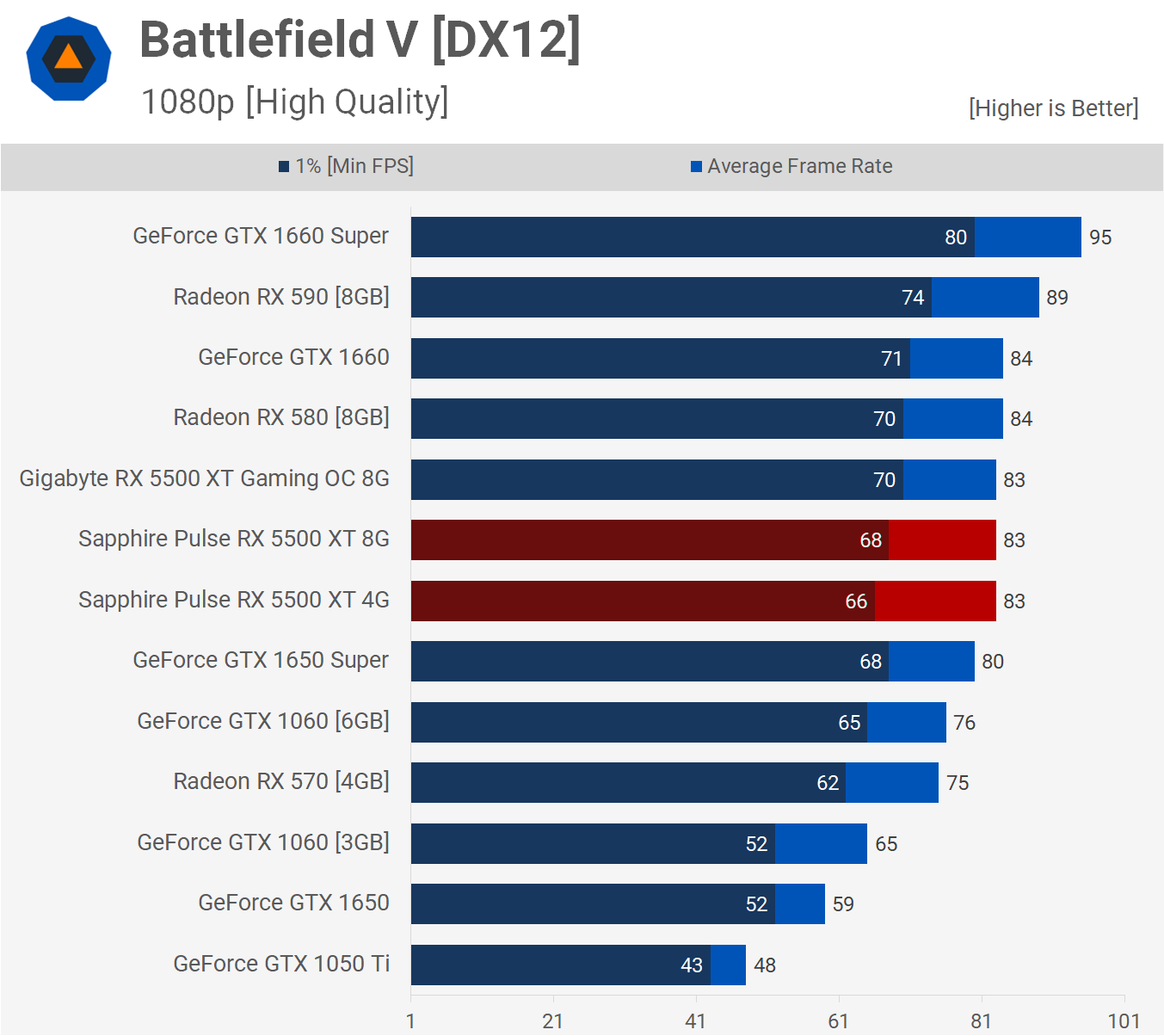

In Battlefield V the 4GB 5500 XT was able to keep pace with the 8GB version at 1080p, both rendering 83 fps on average, which is essentially the same level of performance you'll receive from the RX 580.

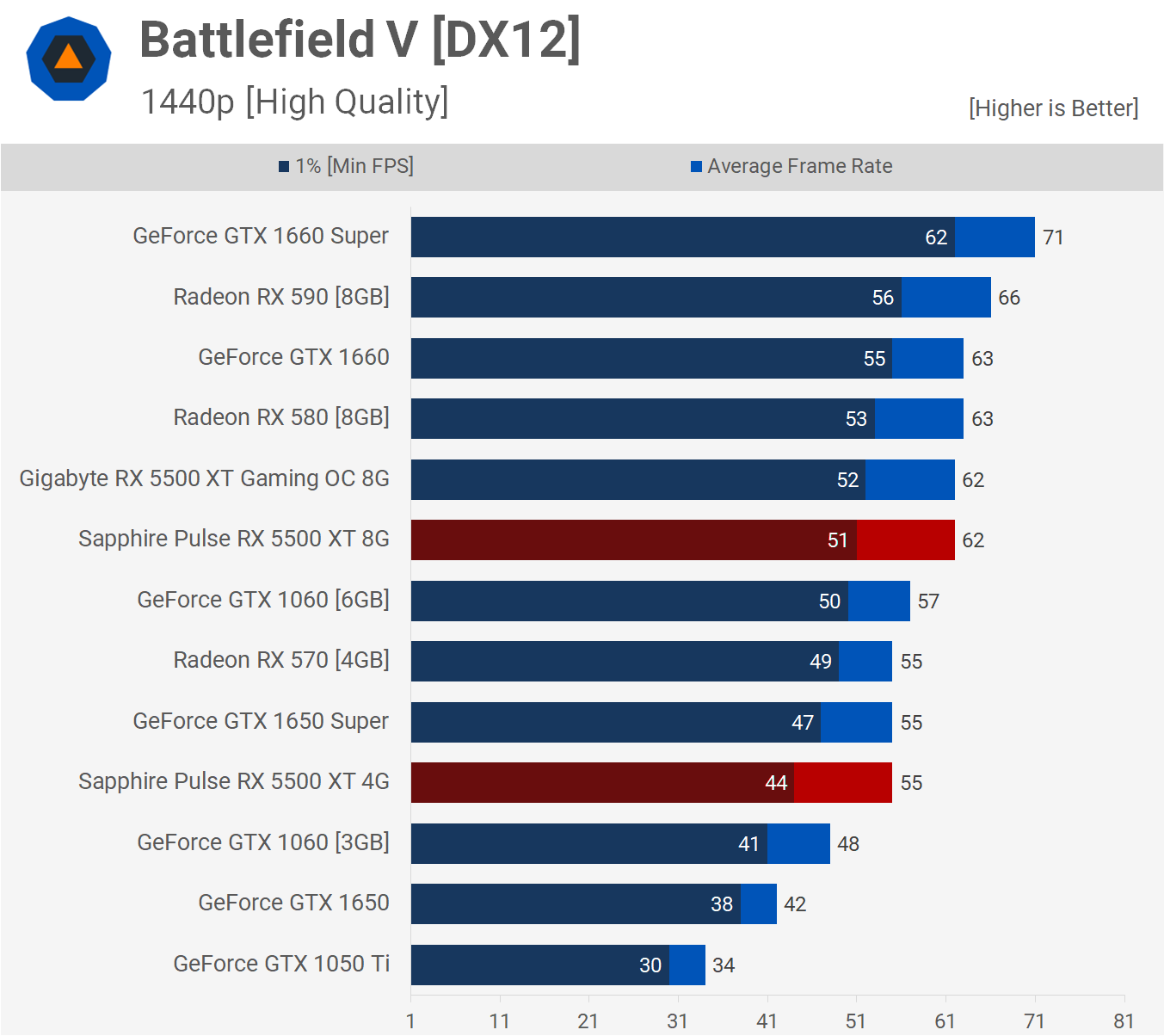

However, the story changes at 1440p where the 4GB 5500 XT struggles and the average frame rate drops by 11%. This means the 5500 XT was now only able to match the 1650 Super and RX 570.

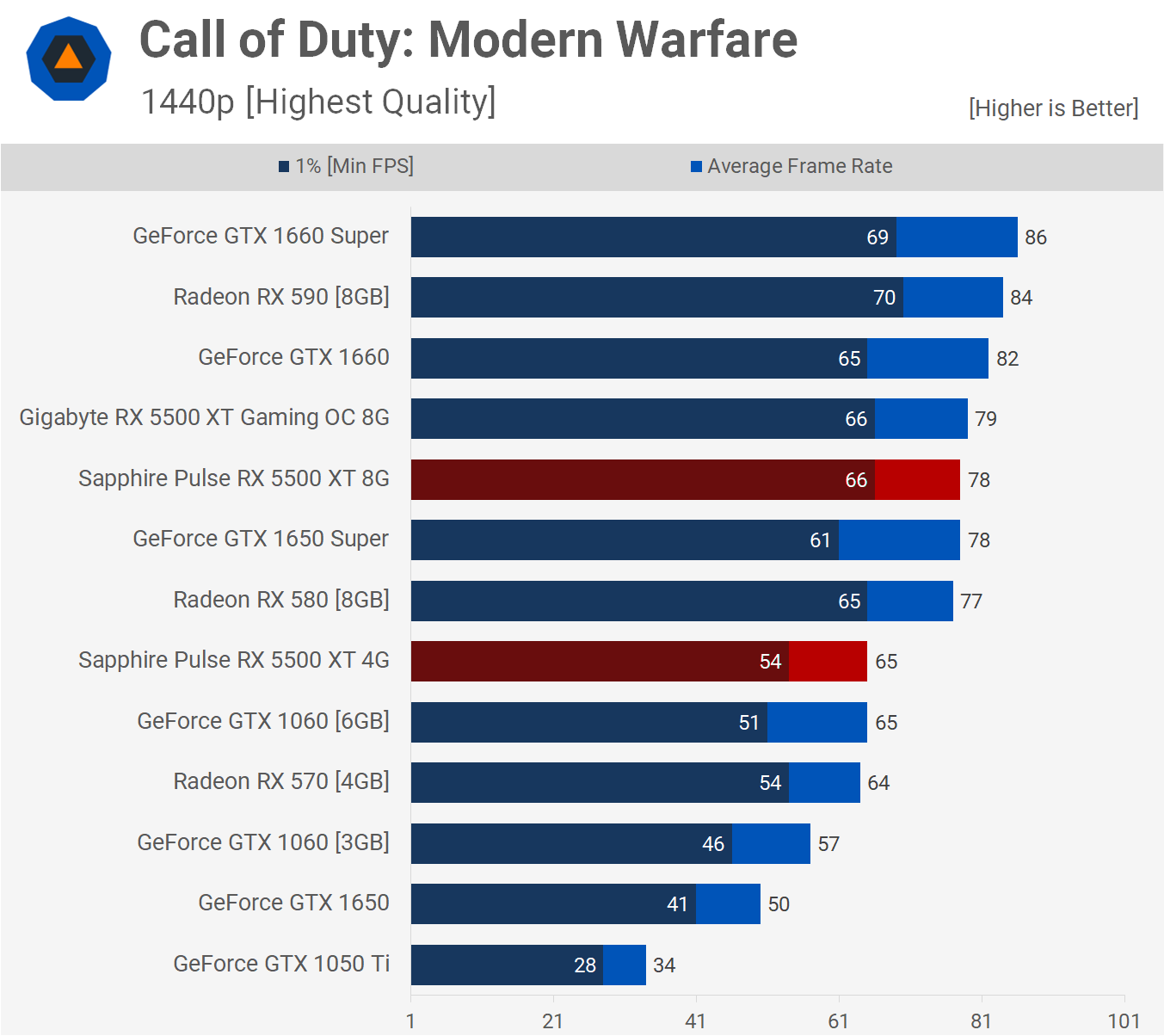

Call of Duty: Modern Warfare is memory hungry even at 1080p, though we are using the highest possible quality settings. The 4GB 5500 XT averaged an impressive 96 fps, but that was still 15% behind the 8GB version, as well as Nvidia's GTX 1650 Super.

The drop off at 1440p was just as harsh. Here the 4GB version was 17% slower and again the 4GB 1650 Super didn't suffer the same fate which is interesting. Once again you're looking at RX 570-like performance with the 4GB 5500 XT in this title.
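To make the percentage margins quoted throughout these results concrete, here is a minimal sketch of the arithmetic, assuming "X% slower" is always measured relative to the faster card. The helper names are hypothetical. It uses the 1080p Call of Duty figures above (96 fps for the 4GB card, 15% behind the 8GB card) to back out the implied 8GB average, which is an inference from those two numbers rather than a value quoted in the review.

```python
def percent_slower(candidate_fps, baseline_fps):
    """How much slower the candidate is, as a percentage of the baseline."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (baseline_fps - candidate_fps) / baseline_fps * 100.0


def implied_baseline(candidate_fps, slower_pct):
    """Invert percent_slower: recover the baseline from a known deficit."""
    if not 0 <= slower_pct < 100:
        raise ValueError("slower_pct must be in [0, 100)")
    return candidate_fps / (1.0 - slower_pct / 100.0)


# 4GB 5500 XT at 1080p: 96 fps, stated as 15% behind the 8GB version.
baseline = implied_baseline(96.0, 15.0)
print(round(baseline, 1))                        # implied 8GB average
print(round(percent_slower(96.0, baseline), 1))  # round-trips to 15.0
```

Note that the same gap looks larger when expressed the other way around: a card that is 15% slower than its rival is beaten by roughly 17.6%, so it matters which card is treated as the baseline.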

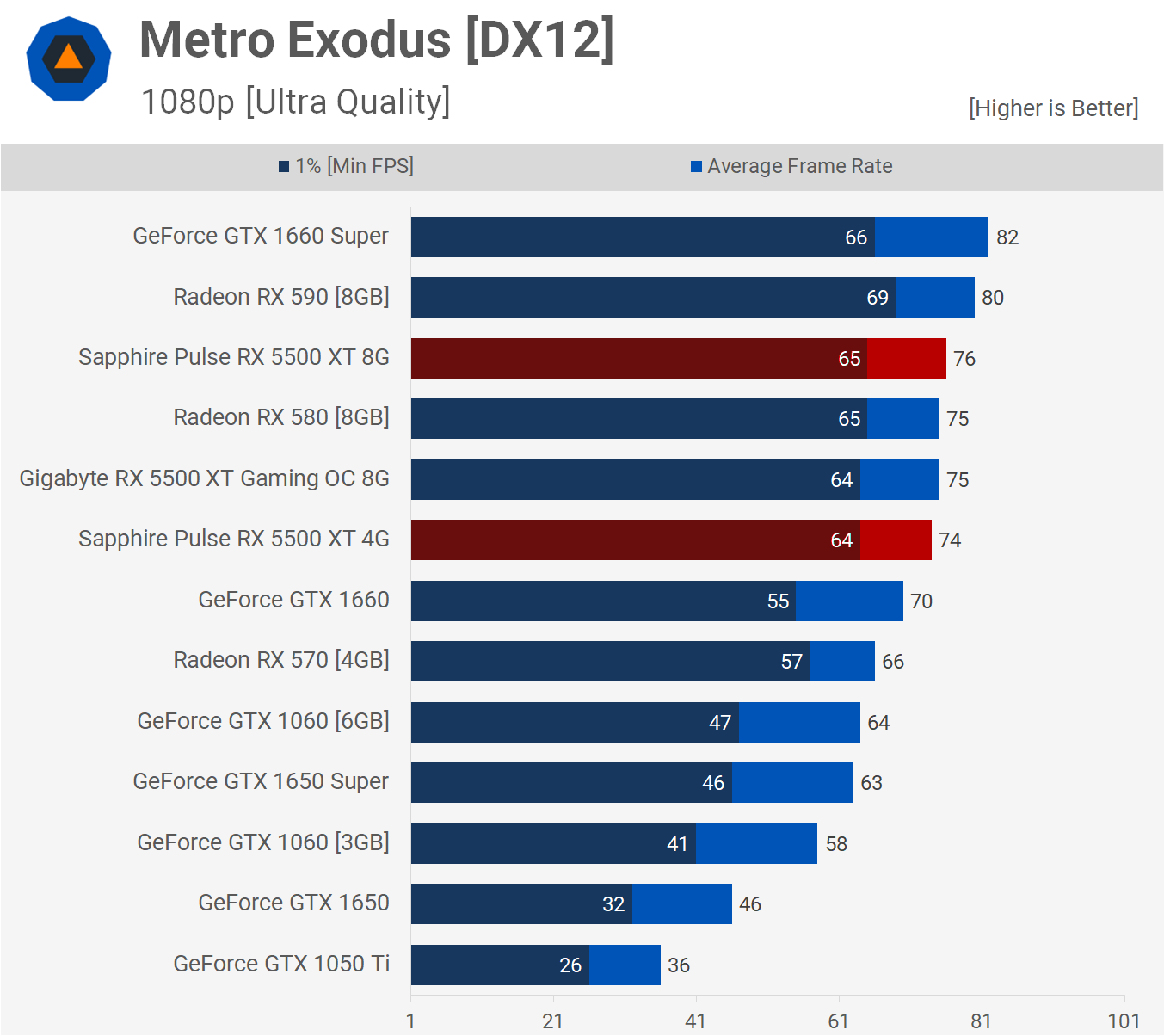

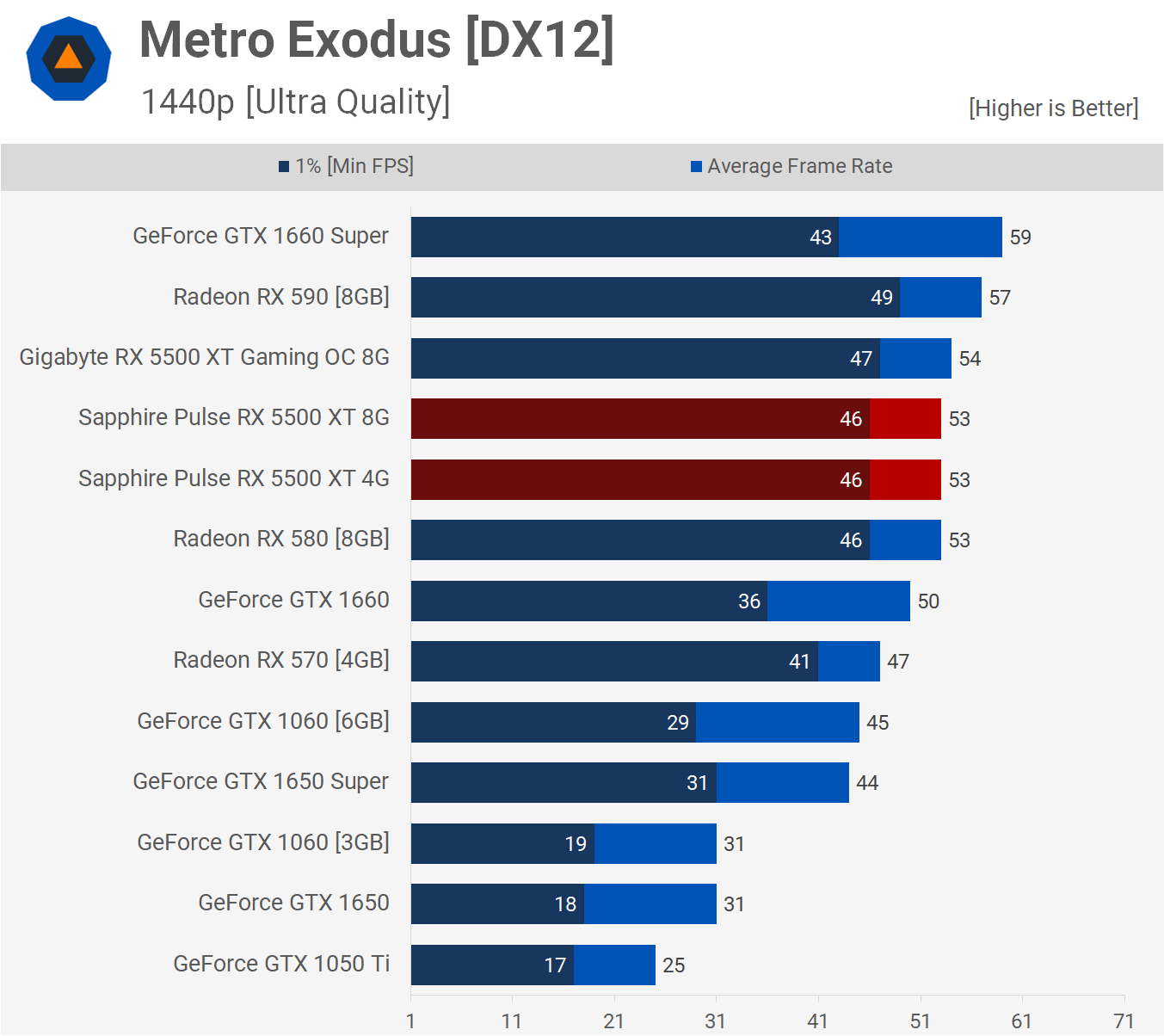

Testing with Metro Exodus using the ultra quality settings, the 4GB 5500 XT hangs in there rather well, matching the 8GB version as well as the 8GB RX 580. We see much the same at 1440p and this time the 4GB 5500 XT was miles faster than the GTX 1650 Super, producing ~20% more frames on average.

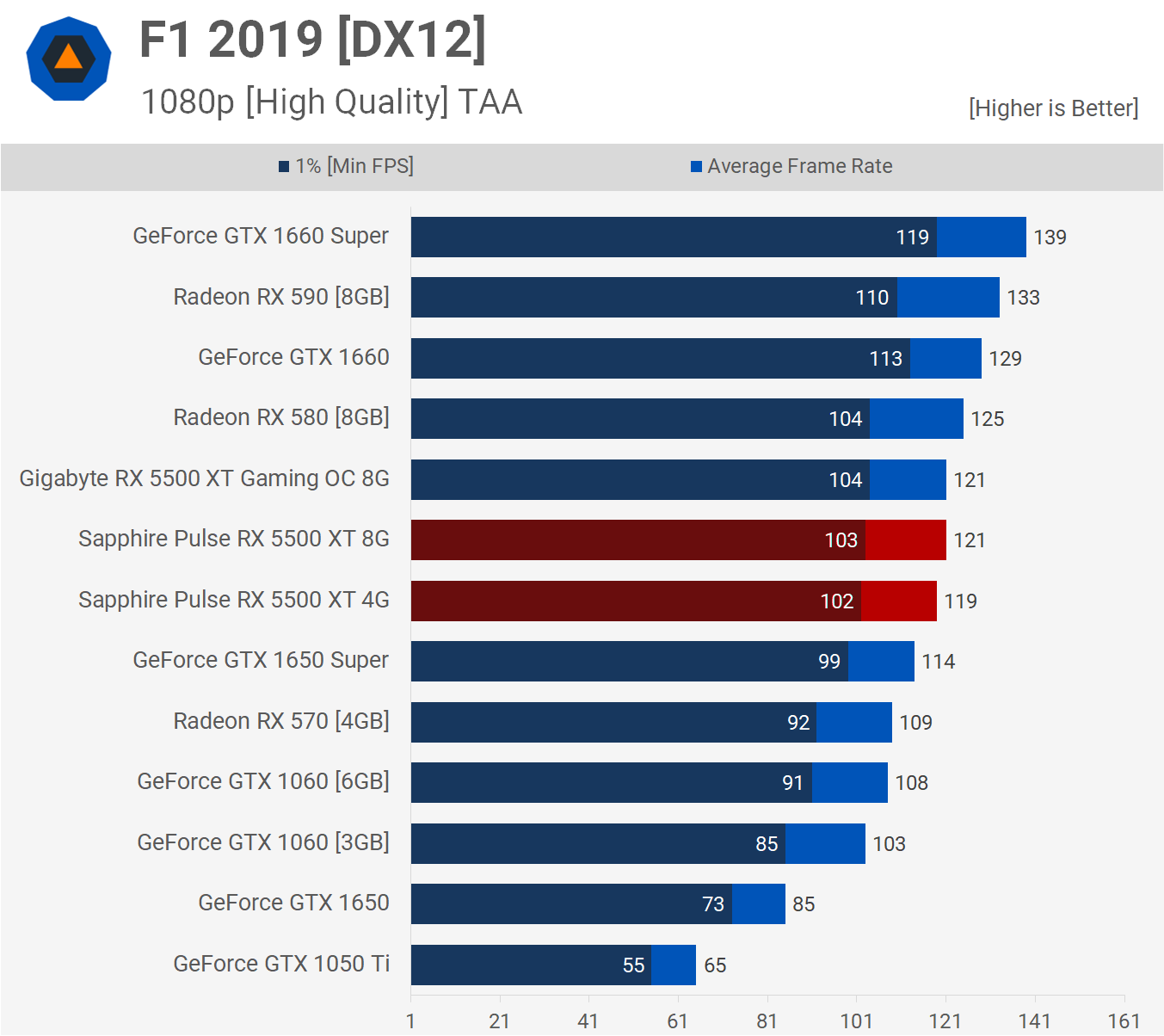

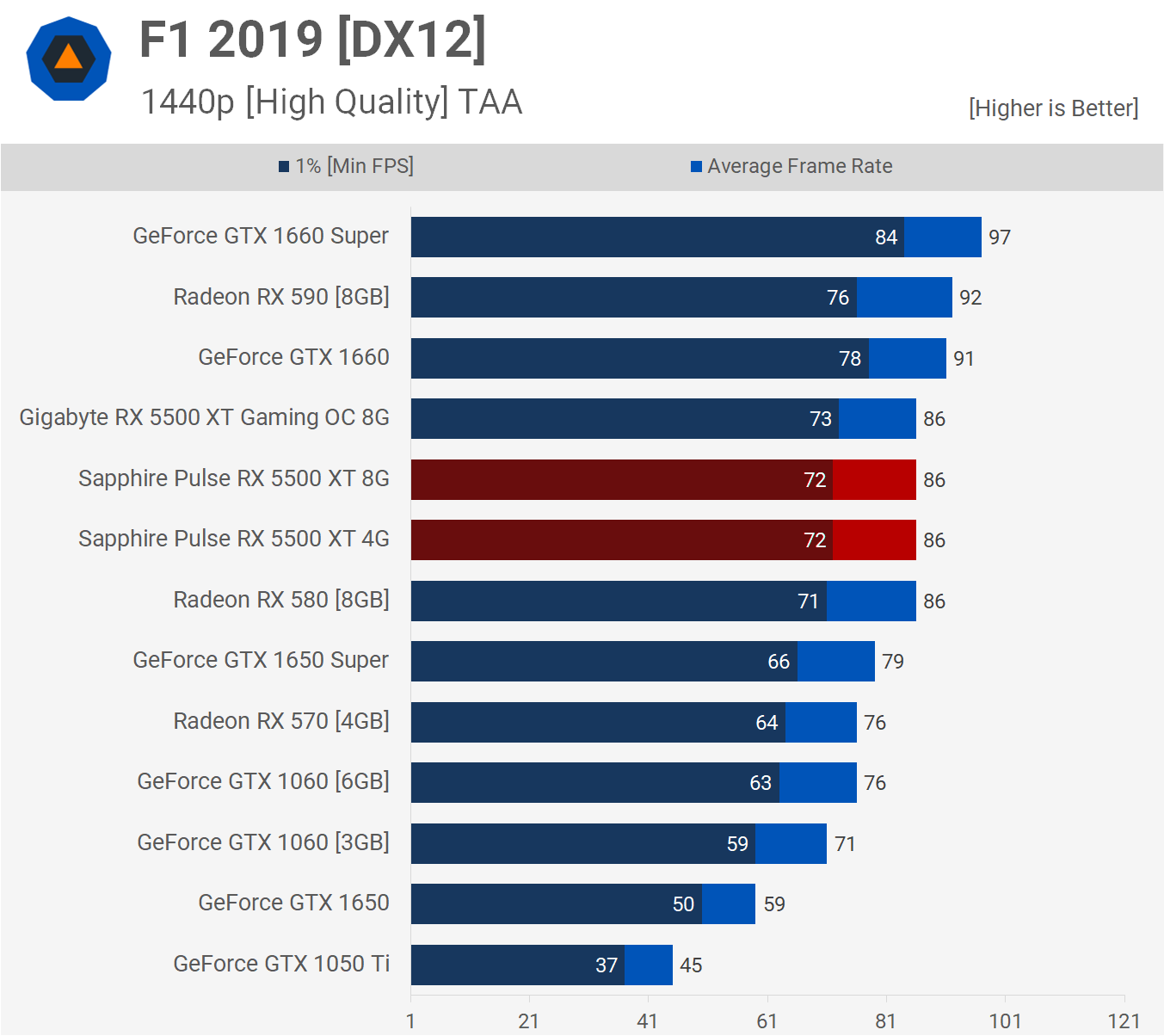

F1 2019 isn't memory intensive and as a result the 4 and 8GB versions of the 5500 XT enabled the same level of performance at 1080p. It's the same situation at 1440p, where the 4GB 5500 XT was good for 86 fps on average, the same performance you'll receive from the RX 580.

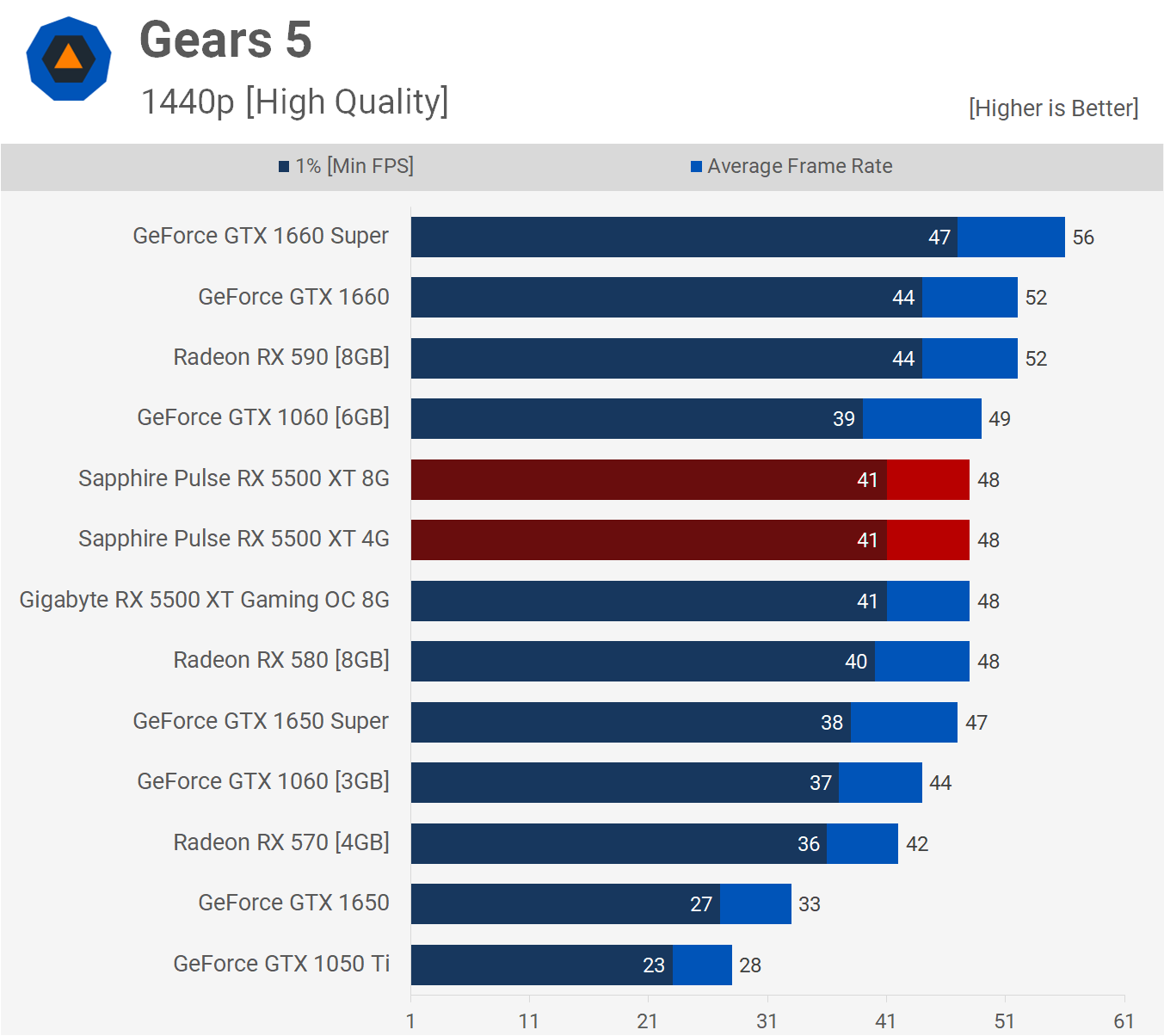

Last up we have Gears 5, where we see no performance difference between the 4 and 8 GB versions of the 5500 XT. At 1440p, both models spat out 48 fps, which is the same level of performance delivered by the RX 580 and GTX 1650 Super.

Performance Summary

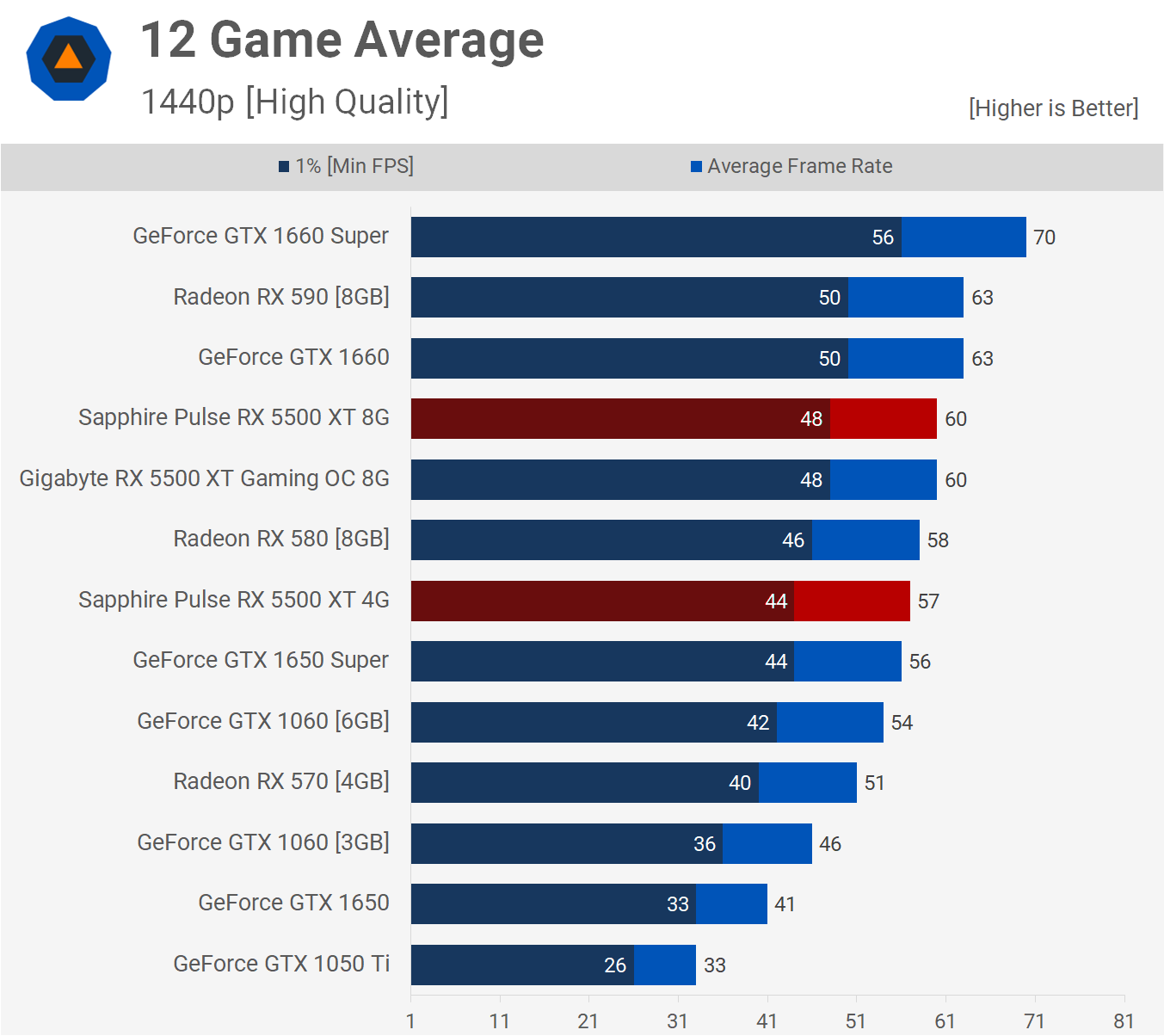

Having looked at a dozen games tested at 1080p and 1440p, it's time to break all that data down. Let's start by looking at the average performance across those 12 titles. These graphs don't tell the whole story, but we'll quickly take a look...

These results can be misleading as they don't show when and how you can run into trouble with the more limited 4GB VRAM buffer. But overall, when taking all the games into account, the 4GB 5500 XT was only ~3.5% slower than the 8GB version and that placed it on par with the RX 580 and GTX 1650 Super.

We see a similar story at 1440p, this time the 4GB 5500 XT was 5% slower on average when compared to the 8GB model, and again matched the RX 580 and GTX 1650 Super.

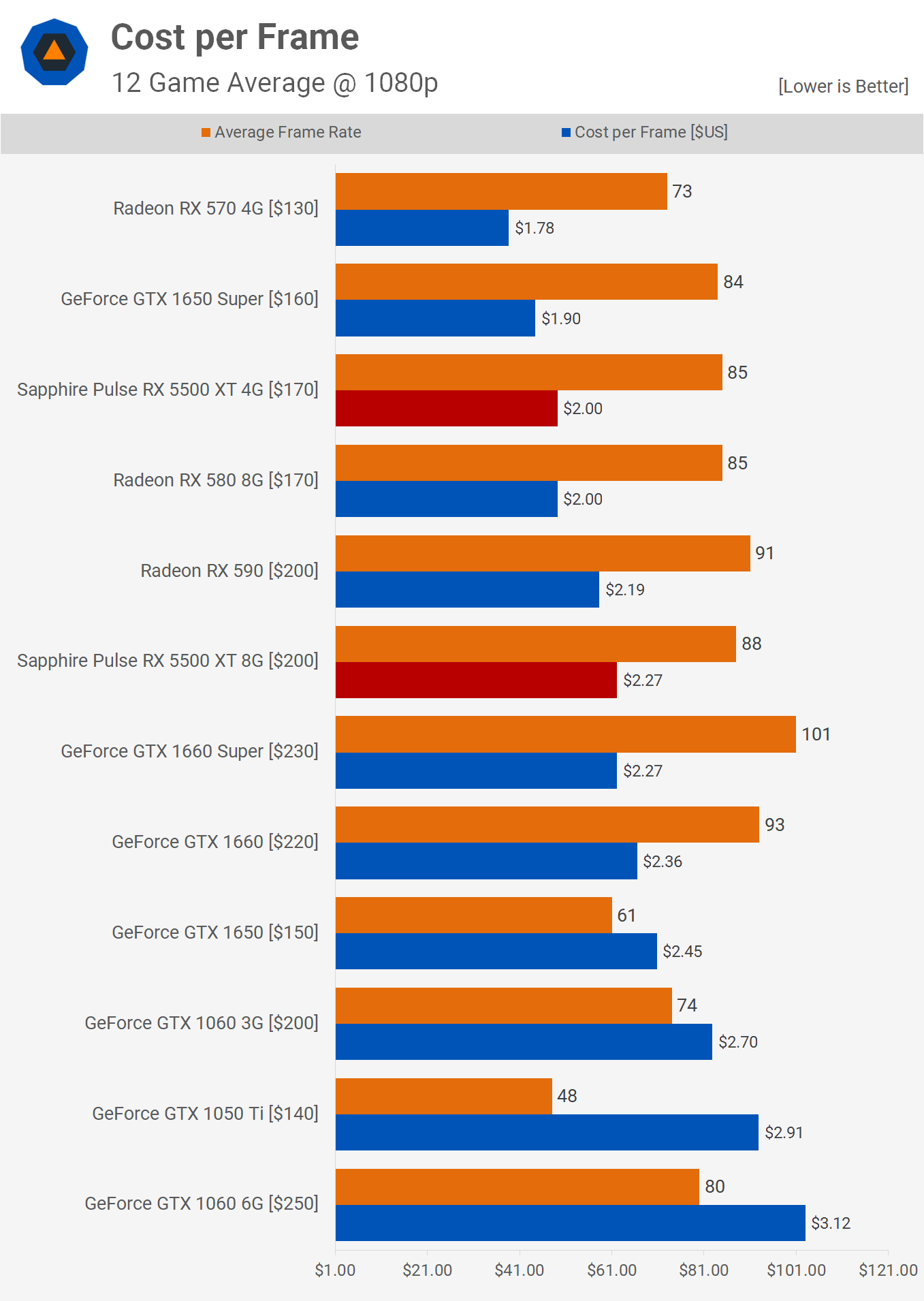

Taking the averages from the 1080p data we see that the 4GB 5500 XT is able to match the cost per frame of the RX 580, so you're trading half the VRAM for a more efficient product and we'll discuss this in a moment.

For now the takeaway is that the GeForce GTX 1650 Super comes in 5% cheaper per frame, which stems from the marginal ~$10 discount on MSRP.
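The cost per frame metric is simply price divided by average frame rate. The sketch below illustrates it using the prices quoted later in this article ($170 for the 4GB 5500 XT and the RX 580, $160 for the GTX 1650 Super). The shared average of 100 fps is a placeholder assumption standing in for "on par" performance, not a measured result, so the output only approximates the margin shown in the graph.

```python
def cost_per_frame(price_usd, avg_fps):
    """Dollars paid per frame of average performance."""
    if avg_fps <= 0:
        raise ValueError("avg_fps must be positive")
    return price_usd / avg_fps


def percent_cheaper(candidate, reference):
    """How much cheaper the candidate is, as a percentage of the reference."""
    return (reference - candidate) / reference * 100.0


# Placeholder: the three cards are described as roughly on par, so one
# hypothetical average is shared by all of them.
ASSUMED_AVG_FPS = 100.0

prices_usd = {
    "RX 5500 XT 4GB": 170.0,
    "GTX 1650 Super": 160.0,
    "RX 580 8GB": 170.0,
}

cpf = {name: cost_per_frame(price, ASSUMED_AVG_FPS)
       for name, price in prices_usd.items()}

for name, value in sorted(cpf.items(), key=lambda item: item[1]):
    print(f"{name}: ${value:.2f} per frame")

saving = percent_cheaper(cpf["GTX 1650 Super"], cpf["RX 5500 XT 4GB"])
print(f"GTX 1650 Super is {saving:.1f}% cheaper per frame")
```

With identical frame rates the $10 price gap alone works out to just under 6%, in the same ballpark as the graph; the measured averages differ slightly between cards, which would account for the remainder.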

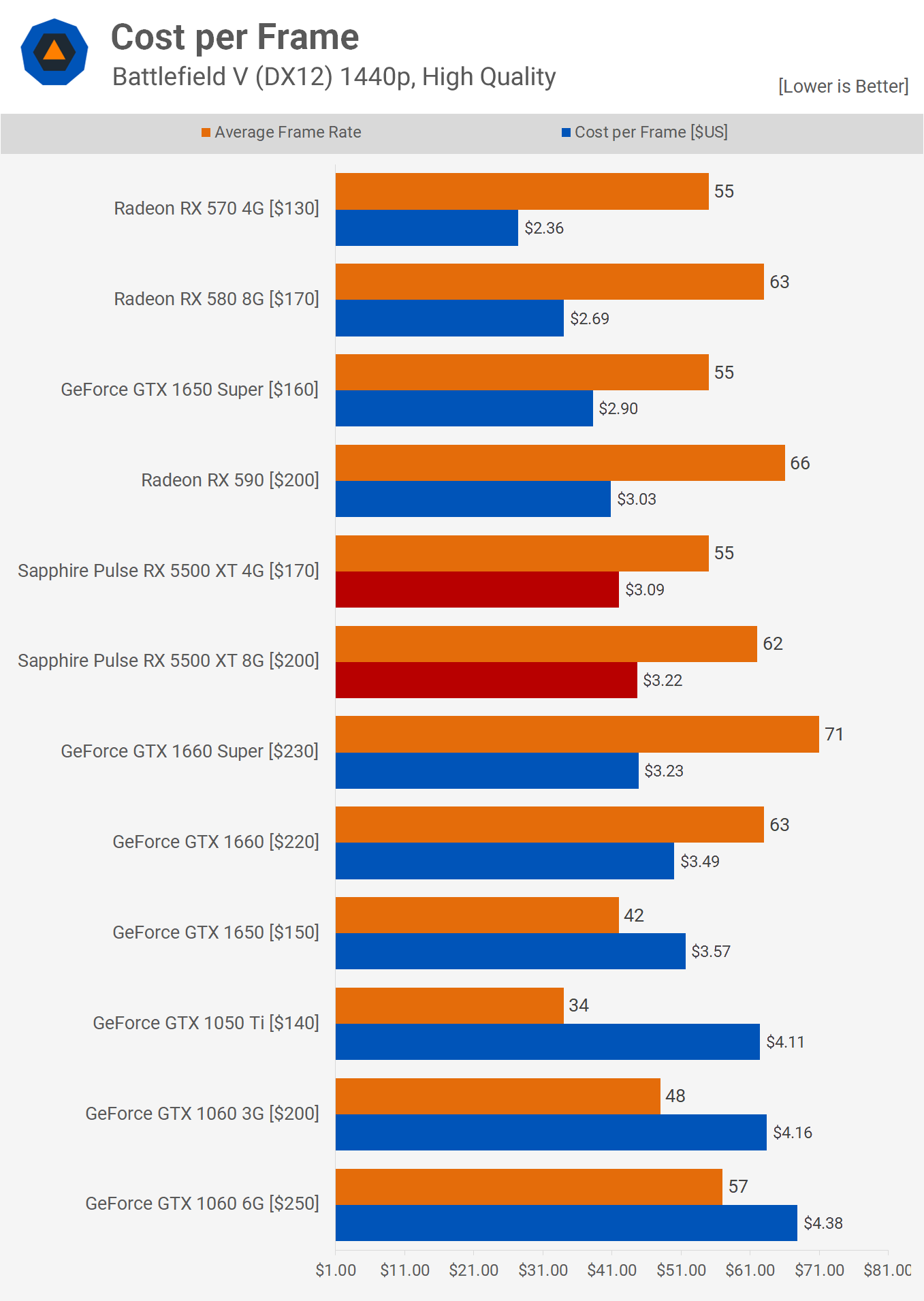

However things get worse for the RX 5500 if we look at a single title like Battlefield V. We're using the 1440p data here because it represents a situation where the 4GB VRAM capacity starts to impact performance considerably, something you'll no doubt see more of in the next year or two.

Where the difference between 4GB and 8GB of memory can be seen, the Radeon RX 580 is miles better in terms of value and the RX 570 is in another class, delivering the same performance as the 4GB 5500 XT at a 24% discount per frame. This is probably the most telling graph we've looked at so far when evaluating the value of the 5500 XT.

Wrapping things up we have a few RX 5500 XT 4GB vs. 8GB graphs that show the margins on a per game basis. Here you can see that the 4GB model struggled in Call of Duty Modern Warfare, Shadow of the Tomb Raider and Red Dead Redemption 2, while we saw small performance drop offs in Far Cry New Dawn, Assassin's Creed Odyssey and Metro Exodus, though they were so small in those titles it's really not worth talking about.

If we look at 1% low performance we see similar margins for the most part, though the 4GB version had a harder time in Assassin's Creed Odyssey.

At 1440p we see no difference between the 4GB and 8GB models for half the games tested, at least when comparing average frame rate performance. The performance drop in Call of Duty was big, and we also see a bit of a drop in Assassin's Creed and double digit performance losses in Battlefield V and Shadow of the Tomb Raider.

The 1440p 1% low data is the most telling graph for future performance. We see little to no impact for half the games tested, but the other half all see double digit hits to the frame rate with three titles dropping by more than 15%.

Power Efficiency or More VRAM?

AMD's late arrival with their mainstream Navi offerings may have to do with a 7nm supply issue. They're not in a position where they can sell them in significant enough quantities to justify being competitive on price. As things stand right now, the GeForce GTX 1650 Super is a more compelling buy when compared to the 4GB 5500 XT. Overall, you're looking at basically the same level of performance, at a very small discount. Looking at our small benchmark sample of games, the GTX 1650 Super's performance seemed more consistent, not suffering the larger performance hits in titles such as Battlefield V and Call of Duty Modern Warfare due to the more limited 4GB memory buffer.

If you're after an efficient budget graphics card, the GTX 1650 Super looks like the way to go. However, in a recent poll we asked what gamers preferred: power efficiency or VRAM capacity, and as we suspected, an overwhelming number of you picked memory capacity as your preference.

That being the case, most of you spending less than $200 on your graphics card may not be interested in the 4GB 5500 XT at $170 or the 4GB GTX 1650 Super at $160, when you can purchase an 8GB RX 580 for $170, sometimes as low as $150. The old Radeon uses substantially more power, but who cares? We wouldn't, and it seems a good portion of you agree.

It's worth noting that idle power consumption is about the same, so while the RX 580 has the potential to run hotter and louder when gaming, having that extra headroom with the memory seems more important for most. And that means, as long as you can purchase the 2+ year old discounted RX 580 8GB graphics card for the same amount of money as these newer 4GB GPUs, that's what we'd do. Had AMD priced the 8GB 5500 XT at no more than $170 and the 4GB model at around $140, then we feel they'd be viable options, but that just doesn't seem possible given the current supply constraints.

4GB vs. 8GB VRAM

Before wrapping up, let's talk a little more about the 4GB vs. 8GB memory buffers...

There's a lot of debate around this topic. Many people claim 4GB just isn't enough and graphics cards shouldn't be selling with such a limited memory capacity in 2019, while others claim 8GB is still overkill for 1080p gaming. In our opinion, neither is right or wrong, it just depends, mostly on what you plan on playing and how you plan on playing it.

Now, we do agree that $200 for a tiny GPU with a 128-bit wide memory bus in late 2019 is too much, regardless of memory capacity, so we're certainly not attempting to justify RX 5500 XT or GTX 1650 Super pricing, we're simply saying how much VRAM you require depends.

For example, if you're a competitive gamer like myself who typically doesn't care about an immersive single player experience with amazing graphics, then VRAM capacity doesn't really matter. When I play Battlefield V, textures and most other quality settings aren't cranked up to ultra. Instead almost all are set to low, which sees VRAM usage drop from around 5 GB down to just 3 GB, and frankly the game still looks good.

It's the same for other games I play such as Fortnite, Apex Legends and StarCraft II. All those games are played with mostly low quality settings, which makes it easier to spot enemies and removes a lot of distracting effects. I'm not alone here either, this is how most gamers play esports/competitive titles, even those that aren't all that demanding to begin with.

In other words, for someone wanting to play Fortnite, spending more money to secure a card with more VRAM is just a waste of money.

But if you are not into esports titles and prefer to play games like Assassin's Creed Odyssey and Shadow of the Tomb Raider for their stunning visuals and story mode, then ensuring that you have a graphics card with headroom in the VRAM department is more important and probably worth paying a small premium for.

If you're this sort of gamer, then you will want to avoid 4GB models like the plague, especially given the upcoming Xbox Series X will have 16 GB of GDDR6 memory with 13GB accessible to games. Expect the next generation of games to see a big step up in memory usage.

With all of that said, we want to note that not having enough VRAM isn't the disaster that some make it out to be. It's nowhere near as bad as, say, having a dual-core processor and playing a game that requires a quad-core or better. In that scenario you're pretty much screwed, and no matter how low you drop the quality settings, you're going to have a bad time.

With limited VRAM, all you have to do is drop texture quality settings down and you're away. Sure, the game might not look as good, but it's still going to be playable and in a make-do scenario that's all you can hope for. Also how a title handles insufficient VRAM will depend on the game. Some turn into a slide show until you reduce memory usage, others won't even let you use the higher quality settings (Red Dead Redemption 2), and some will automatically reduce or remove textures entirely, often leaving you with a blurry mess.

Providing a more affordable option with less memory for casual or competitive gamers isn't a bad thing, you just don't want to be paying near $200 for a cut down option right now. As we said before, had the 4GB 5500 XT come in at ~$140 then it would have been a pretty solid deal, but as things stand today, you're better off with an 8GB RX 580, end of story.
